This guide covers the importance of data in the business world and the growing need to understand how businesses rely on data.

Modern companies rely on data to understand customer transactions, and interest in the topic is surging: Google Trends now shows searches for big data exceeding those for cloud computing. The majority of executives agree that if they could harness all of their data, they’d be much stronger businesses. This guide offers an introduction to the big data landscape as well as an overview of the importance of big data in business.

Today, it is not uncommon to see data described as the ‘currency’ of the digital economy, suggesting that data has become far more important and valuable than in previous eras.

Data volumes that were until recently measured in terabytes are now measured in zettabytes (1 billion terabytes). The research consultancy IDC estimates the volume of world data will reach 3.6 zettabytes in 2013, and will keep doubling approximately every two years.
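To make the doubling claim concrete, here is a minimal Python sketch that projects volume forward under the simplifying assumption that IDC’s 3.6 ZB figure for 2013 doubles exactly every two years. Real growth will not follow a clean exponential; the function names and parameters are illustrative only.

```python
# Sketch: project global data volume assuming a constant doubling period.
# Assumptions (illustrative only): 3.6 ZB in 2013 (the IDC estimate cited above),
# doubling exactly every 2 years.

ZB_IN_TB = 10**9  # 1 zettabyte = 10^21 bytes = 1 billion terabytes (decimal units)


def zb_to_tb(zb: float) -> float:
    """Convert zettabytes to terabytes."""
    return zb * ZB_IN_TB


def projected_volume_zb(year: int, base_year: int = 2013,
                        base_zb: float = 3.6, doubling_years: float = 2.0) -> float:
    """V(t) = V0 * 2 ** ((t - t0) / T), where T is the doubling period in years."""
    return base_zb * 2 ** ((year - base_year) / doubling_years)


for y in (2013, 2015, 2017, 2019):
    print(f"{y}: {projected_volume_zb(y):.1f} ZB")
# 2013: 3.6 ZB, 2015: 7.2 ZB, 2017: 14.4 ZB, 2019: 28.8 ZB
```

Because doubling compounds, the projection reaches eight times the 2013 volume within six years under these assumptions.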

Taken together, these factors have ushered in the era of data, and the aptly named concept of big data emerges when data reaches extreme sizes, speeds and types.

78% of Executives Agree

"If we could harness all of our data, we’d be a much stronger business"

While the threshold for what constitutes big data varies from company to company, organizations of all types will seek ways to unlock additional value from the data most relevant to them, be it on a large scale or a small scale. In many ways, big data has become a proxy for data initiatives in general. As such, the big data trend will present a range of direct and indirect business opportunities for IT channel partners with the right mix of technical skill and business savvy.

As with many emerging technologies, sizing the big data market can be challenging. Big data spans the hardware, software, and services segments of the market, which means a degree of uncertainty will inevitably exist in how revenue gets classified.

With that caveat, IDC projects the big data market to grow nearly 32% annually (CAGR), reaching $23.8 billion worldwide by 2016. In comparison, Gartner expects big data to drive $34 billion in IT spending in 2013, up from $28 billion in 2012. The online big data community Wikibon provides similar forecasts, along with an estimated market breakdown of 41% hardware, 39% services, and 20% software.
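The forecasts above are expressed as compound annual growth rates. As a rough illustration (not a reproduction of any analyst’s model), the following Python sketch computes the growth rate implied by Gartner’s $28 billion to $34 billion figures and shows how a ~32% CAGR compounds over four years from a hypothetical $1 billion base.

```python
# Sketch: compound annual growth rate (CAGR) arithmetic behind market forecasts.


def cagr(start_value: float, end_value: float, years: float) -> float:
    """CAGR = (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def grow(start_value: float, rate: float, years: float) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


# Gartner's 2012 -> 2013 figures ($28B -> $34B) imply roughly 21% one-year growth.
gartner_growth = cagr(28.0, 34.0, 1)
print(f"Gartner implied growth: {gartner_growth:.1%}")  # 21.4%

# Hypothetical base: what ~32% CAGR does to $1B over four years.
print(f"$1B at 32% for 4 years: ${grow(1.0, 0.32, 4):.2f}B")  # $3.04B
```

At roughly 32% a year, a market about triples in four years, which is why modest differences in assumed growth rates produce very different forecasts.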

To put the preceding big data revenue figures into context, the market sizes for categories such as storage, data centers, business intelligence software and services are significantly larger than estimates for the big data market. Over time, increasing portions of these categories may be allocated to big data.

The list of players in the big data space continues to expand. A number of pure-play big data startups and a broad array of diversified portfolio vendors form the big data vendor universe. See the Additional Resources section for more details.

When it comes to definitions and outlook, emerging technology trends rarely produce immediate consensus. Even mature IT trends can continue to generate debate because of varying interpretations.

In the case of big data, industry pundits have begun to describe the concept in the context of three defining elements.

Big Data Definition: a volume, velocity and variety of data that exceeds an organization’s storage or compute capacity for accurate and timely decision-making (Source: META Group).

There are many variations of this definition and many thresholds for what constitutes big data, but this form of definition generally provides a good starting point for any big data discussion.

Data volumes continue to rise due to more sources of digital data creation, as well as larger file sizes such as high-definition video, MRI scans or IP-based voice traffic. Setting a specific volume requirement for big data is difficult since data volumes change over time and are relative: a data set may be considered ‘big’ for one organization and ‘small’ for another. With those caveats in mind, Deloitte uses an estimate of around 5 petabytes as a threshold for big data. To put this into context, McKinsey calculated that companies with more than 1,000 employees stored an average of 200 terabytes in 2010 (1 petabyte = 1,000 terabytes). A 2011 survey by InformationWeek Analytics found that 9% of companies had 1 petabyte or more of storage under active management. These results indicate the Deloitte threshold applies to a relatively small pool of companies, although that is rapidly changing.
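The unit conversions in the paragraph above are easy to lose track of. A small Python sketch, assuming decimal units (1 PB = 1,000 TB) and treating Deloitte’s ~5 PB figure as a configurable threshold rather than a fixed rule:

```python
# Sketch: convert storage volumes and compare them with a big data threshold.
# Assumes decimal (SI) units as in the text: 1 PB = 1,000 TB.

TB_PER_UNIT = {"TB": 1, "PB": 1_000, "EB": 1_000_000, "ZB": 1_000_000_000}


def to_terabytes(amount: float, unit: str) -> float:
    """Convert an amount expressed in TB, PB, EB or ZB to terabytes."""
    return amount * TB_PER_UNIT[unit.upper()]


def share_of_threshold(volume_tb: float, threshold_pb: float = 5.0) -> float:
    """Fraction of the threshold (default: Deloitte's ~5 PB) that a volume represents."""
    return volume_tb / to_terabytes(threshold_pb, "PB")


def is_big_data(volume_tb: float, threshold_pb: float = 5.0) -> bool:
    """True when a volume meets or exceeds the chosen threshold."""
    return share_of_threshold(volume_tb, threshold_pb) >= 1.0


print(f"{share_of_threshold(200):.0%}")    # 4%: McKinsey's 2010 average vs. 5 PB
print(is_big_data(to_terabytes(1, "PB")))  # False: 1 PB is still below 5 PB
```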

Full Speed Ahead

Businesses are swimming in data and need help managing it

Big Data is a hot topic, so plenty of receptive customers

Many ‘low hanging fruit’ data opportunities

Data initiatives typically span multiple departments, so often more budget to work with

Not so Fast

After scratching the surface, lots of customer and channel confusion surrounding big data

Many customers must first master ‘small data’ before even considering a big data initiative

Coordination across departments and functional areas may inhibit adoption and utilization

Developing a skilled workforce with big data expertise and experience will take time

Velocity of data refers to the speed with which data is produced, collected, or monitored. Some companies may rapidly produce their own data, such as a medical imaging company or a stock exchange, while others may have a need to collect or monitor third-party data sources that are rapidly generated, such as weather or traffic streams. As Gartner points out, the velocity component is often variable, with short bursts of high-velocity, high-volume data, followed by lulls. For companies operating under this scenario, scalable infrastructure is a must.
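Gartner’s point about bursts followed by lulls can be seen by counting events per time window. The sketch below is a minimal Python illustration with hypothetical timestamps and an assumed one-second window; the peak-to-mean ratio it reports is one simple way to gauge how much headroom a scalable system needs beyond average load.

```python
# Sketch: count events per fixed time window to expose bursts and lulls in velocity.
# Timestamps (in seconds) and the 1-second window are hypothetical.
from collections import Counter


def events_per_window(timestamps: list[float], window_s: float = 1.0) -> dict[int, int]:
    """Map window index k -> number of events with k*window_s <= t < (k+1)*window_s."""
    counts = Counter(int(t // window_s) for t in timestamps)
    return dict(sorted(counts.items()))


def peak_to_mean(counts: dict[int, int]) -> float:
    """Busiest window divided by the average window, counting empty windows (lulls)."""
    if not counts:
        return 0.0
    n_windows = max(counts) - min(counts) + 1
    mean = sum(counts.values()) / n_windows
    return max(counts.values()) / mean


# A burst of 8 events in the first second, a lull, then 2 events in second 3.
timestamps = [0.05 * i for i in range(8)] + [3.2, 3.7]
counts = events_per_window(timestamps)
print(counts)                # {0: 8, 3: 2}
print(peak_to_mean(counts))  # 3.2: peak load is 3.2x the average
```

Infrastructure sized for the average window would be overwhelmed during the burst, which is the practical argument for scalable capacity.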

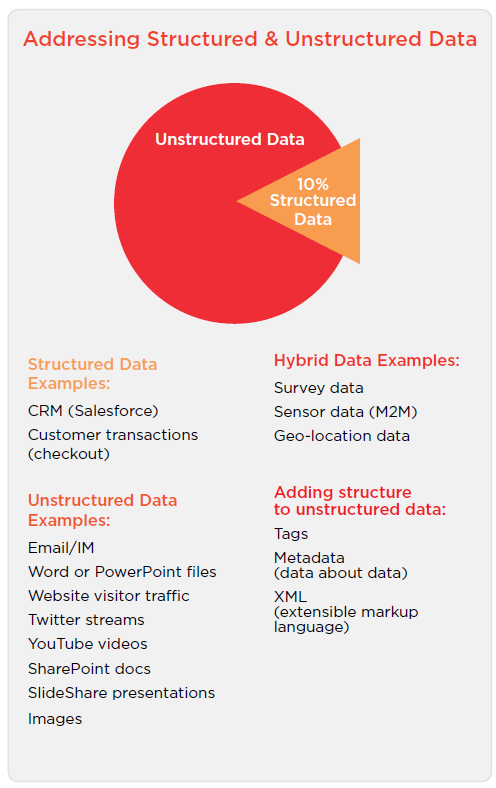

Variety of data refers to data type, which is typically categorized as either structured or unstructured data. Structured data refers to data stored in defined fields within a database record. Unstructured data is basically everything else, ranging from documents and presentation files to images, video and Twitter streams. IDC estimates 80% of enterprise data created falls into the unstructured data category, while 90% of the total digital universe involves unstructured data.
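The practical difference between structured and unstructured data shows up as soon as you try to query it. In this illustrative Python sketch (all records and posts are hypothetical), a structured record set can be filtered by field, while the same kind of fact in free text has to be extracted with a pattern that is easy to get wrong:

```python
# Sketch: structured data is queried by field; unstructured text must be parsed first.
import re

# Structured: every record has the same defined fields.
orders = [
    {"order_id": 1, "customer": "acme", "amount": 120.0},
    {"order_id": 2, "customer": "globex", "amount": 75.5},
    {"order_id": 3, "customer": "acme", "amount": 40.0},
]
acme_total = sum(o["amount"] for o in orders if o["customer"] == "acme")

# Unstructured: similar facts buried in free text (hypothetical social posts).
posts = [
    "Loving the new widget from acme, paid $120 and worth it",
    "globex support was slow today",
]
# Extraction needs a pattern, and it silently misses amounts phrased differently
# (e.g. "120 dollars").
amounts = [float(m) for p in posts for m in re.findall(r"\$(\d+(?:\.\d+)?)", p)]

print(acme_total)  # 160.0
print(amounts)     # [120.0]
```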

Since the early days of databases, Structured Query Language (SQL) has been vital to storing and managing data. Developed by IBM in the 1970s and popularized by Oracle as part of its commercially available database systems, SQL has a long history and is well known among developers for its ability to operate on centrally managed database schemas and indexed data. However, SQL also has limits. As data volumes grow, the architecture of SQL applications that operate on monolithic relational databases becomes untenable. Furthermore, the variety of data being collected no longer fits into standard relational schemas. Two classes of data management solutions have cropped up to address these issues: NewSQL and NoSQL.
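For readers less familiar with SQL, the following sketch uses Python’s built-in `sqlite3` module to show the centrally managed schema, index, and declarative query the paragraph describes. The table and data are hypothetical, and SQLite stands in here for any single-server relational database:

```python
# Sketch: SQL on a defined schema with an index, using Python's built-in sqlite3.
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions ("
    " id INTEGER PRIMARY KEY,"
    " customer TEXT NOT NULL,"
    " amount REAL NOT NULL)"
)
# An index on customer lets the engine locate matching rows without a full table scan.
conn.execute("CREATE INDEX idx_customer ON transactions(customer)")
conn.executemany(
    "INSERT INTO transactions (customer, amount) VALUES (?, ?)",
    [("acme", 120.0), ("globex", 75.5), ("acme", 40.0)],
)
rows = conn.execute(
    "SELECT customer, SUM(amount) FROM transactions GROUP BY customer ORDER BY customer"
).fetchall()
print(rows)  # [('acme', 160.0), ('globex', 75.5)]
conn.close()
```

Everything here lives in one database on one machine; growing it means a bigger machine, which is the vertical-scaling limit the following paragraph addresses.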

NewSQL allows developers to utilize the expertise they have built in SQL interfaces, but directly addresses scalability and performance concerns. Traditional SQL systems can be made faster by vertical scaling (adding computing resources to a single machine), while NewSQL systems are built to improve the performance of the database itself or to take advantage of horizontal scaling (adding commodity machines to form a pool of resources and allow for distributed storage and processing). Along with maintaining a connection to the SQL language, NewSQL solutions allow companies to process transactions that are ACID-compliant.
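Horizontal scaling depends on spreading data across machines. One common building block is hash partitioning (sharding), sketched below in Python. The node names are hypothetical, and this simple modulo scheme is an illustration only; production NewSQL and NoSQL systems use more elaborate schemes such as consistent hashing or range partitioning.

```python
# Sketch: horizontal scaling via hash partitioning (sharding) keys across nodes.
# Node names are hypothetical; simple modulo hashing is used for clarity only.
import hashlib


def shard_for(key: str, nodes: list[str]) -> str:
    """Pick a node for a key using a stable hash (Python's built-in hash() varies per process)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return nodes[int.from_bytes(digest[:8], "big") % len(nodes)]


nodes = ["node-a", "node-b", "node-c"]
keys = [f"customer-{i}" for i in range(1000)]
placement = {k: shard_for(k, nodes) for k in keys}

# Each node stores roughly a third of the keys, so capacity grows with the pool.
per_node = {n: sum(1 for v in placement.values() if v == n) for n in nodes}
print(per_node)

# Adding a node adds capacity, but with modulo hashing most keys change nodes,
# which is why production systems favor schemes such as consistent hashing.
bigger = nodes + ["node-d"]
moved = sum(shard_for(k, bigger) != placement[k] for k in keys)
print(f"{moved} of {len(keys)} keys would move")
```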

Although scalability and performance are addressed with NewSQL, there remains the issue of data that is not easily captured by relational databases. This type of data, known as unstructured data, can include email messages, documents, audio/video files, and social streams. To handle unstructured data, many firms are turning to NoSQL solutions. Like NewSQL, NoSQL applications come in many flavors: document-oriented databases, key-value stores, graph databases, and tabular stores. Many NoSQL applications are built on, or run alongside, Hadoop, an open source framework that acts as a sort of platform for big data: a lower-level component that acts as a bridge between hardware resources and end user applications. Hadoop consists of two primary components: (1) the Hadoop Distributed File System (HDFS) and (2) the MapReduce engine.

Data is stored in the Hadoop Distributed File System (HDFS), which allows for storage of large amounts of data over a distributed pool of commodity resources. This removes the requirement of traditional relational databases to have all the data centrally located. The MapReduce engine, utilizing parallel processing architecture, provides the capability to sort through and analyze massive amounts of data in extremely short timeframes. The ability of Hadoop to spread data and processing over a wide pool of resources makes it appealing for firms with big data needs. For example, Twitter users generate 12 TB of data per day, more data than can be reliably written to a single hard drive for archival purposes. Twitter uses Hadoop to store data on clusters, then employs a variety of NoSQL solutions for tracking, search, and analytics.
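The MapReduce model described above can be illustrated with the classic word-count example. This Python sketch runs the map, shuffle, and reduce phases sequentially in one process; on a Hadoop cluster, the framework would run many map and reduce tasks in parallel over data stored in HDFS. It mimics the programming model only and does not use Hadoop’s actual Java API.

```python
# Sketch: the MapReduce pattern (map -> shuffle -> reduce) as a word count.
# Runs sequentially here; Hadoop would run these tasks in parallel across nodes.
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Emit (word, 1) for each word in one input record."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Group intermediate values by key (the framework does this between phases)."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Combine all values emitted for one key."""
    return key, sum(values)


def map_reduce(lines: Iterable[str]) -> dict[str, int]:
    intermediate = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, v) for k, v in shuffle(intermediate).items())


tweets = ["big data is big", "Hadoop stores big data"]
print(map_reduce(tweets))
# {'big': 3, 'data': 2, 'is': 1, 'hadoop': 1, 'stores': 1}
```

Because each map call sees only one record and each reduce call sees only one key, both phases can be split across machines without coordination, which is what lets Hadoop scale out.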


These NoSQL solutions are flooding the marketplace for multiple reasons. First, the nature of Hadoop as a platform means that it is not well suited for direct use by most end users. Creating jobs for the MapReduce engine is a specialized task, and applications built on the Hadoop platform can create interfaces that are more familiar to a wide range of software engineers. Second, applications built on the Hadoop platform can more directly address specific data issues. Two of Twitter’s tools are Pig (a high-level interface language that is more accessible to programmers) and HBase (a distributed column-based data store running on HDFS). Another popular tool is Hive, a data warehouse tool providing summarization and ad hoc querying via a language similar to SQL, which allows SQL experts to come up to speed quickly.

According to IDC, the Hadoop market is growing 60% annually (CAGR). However, like big data itself, Hadoop is still in its early stages. Analysis suggests many early adopters of Hadoop are using it primarily for storage and ETL (Extract, Transform, and Load) purposes. Moving on to large-scale analytics and data exploration will take time as organizations progress along the learning curve.
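To illustrate what an ETL job does, here is a deliberately tiny Python sketch that extracts rows from CSV text, transforms them (trimming, dropping incomplete records, casting types), and loads them into an in-memory SQLite table. The data and table name are hypothetical; Hadoop-based ETL applies the same three steps to far larger inputs.

```python
# Sketch: a tiny Extract-Transform-Load job (hypothetical data and table name).
import csv
import io
import sqlite3

RAW_CSV = """date,store,sales
2013-05-01,north, 1200
2013-05-01,south,
2013-05-02,north,1350
"""


def extract(text: str) -> list[dict[str, str]]:
    """Parse CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict[str, str]]) -> list[tuple[str, str, float]]:
    """Trim whitespace, drop rows with missing sales, and cast sales to float."""
    clean = []
    for row in rows:
        sales = row["sales"].strip()
        if not sales:
            continue  # skip incomplete records
        clean.append((row["date"], row["store"].strip(), float(sales)))
    return clean


def load(records: list[tuple[str, str, float]], conn: sqlite3.Connection) -> None:
    """Write cleaned records into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS daily_sales (date TEXT, store TEXT, sales REAL)")
    conn.executemany("INSERT INTO daily_sales VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
totals = conn.execute("SELECT store, SUM(sales) FROM daily_sales GROUP BY store").fetchall()
print(totals)  # [('north', 2550.0)]
```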

Keep in mind, although Hadoop is able to speed up certain data operations related to storage or processing, it is not ideally suited for real-time analysis since it is designed to run batch jobs at regular intervals. Some experts believe that real-time analysis will be the most difficult challenge to solve as companies begin big data initiatives. Many areas of a system could act as a bottleneck, and many NewSQL and NoSQL tools developed to handle scalability or unstructured data also improve transaction time at the software level. Other tools, such as the Cassandra database, the Amazon Dynamo storage system, and Google’s BigQuery, replicate some of Hadoop’s features but seek to improve real-time analysis capability as well as ease of use.
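The batch-versus-real-time distinction can be sketched in a few lines of Python. This is a conceptual illustration under simplifying assumptions, not a description of how Hadoop, Cassandra, or BigQuery work internally: a batch job recomputes an answer by rescanning all stored data, while an incremental aggregate updates its answer as each event arrives.

```python
# Sketch: batch recomputation vs. incremental (streaming) aggregation.


def batch_average(all_values: list[float]) -> float:
    """Recompute from scratch over the full dataset, as a scheduled batch job would."""
    return sum(all_values) / len(all_values)


class RunningAverage:
    """Constant-time update per event; historical data is never revisited."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, value: float) -> float:
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean


stream = [10.0, 20.0, 30.0, 40.0]
live = RunningAverage()
for v in stream:
    current = live.update(v)  # an up-to-date answer is available after every event

print(current, batch_average(stream))  # 25.0 25.0
```

Both approaches reach the same answer; the difference is when it is available and how much data must be reread to get it.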

Note: In late May 2013, Hadoop 2.0 was released. According to the release manager, Arun Murthy, Hadoop 2.0 includes a significant upgrade of the MapReduce framework to Apache YARN. This helps move Hadoop away from ‘one-at-a-time’ batch-oriented processing toward running multiple data analysis queries or processes at once.

Given the distributed nature of big data systems, cloud solutions are appealing options for companies with big data needs, especially companies that perceive a new opportunity in the space but do not own a large amount of infrastructure. However, cloud solutions also introduce a number of new variables, many of which are out of the customer’s control. Users must ensure that the extended network can deliver timely results, and companies with many locations will want to consider duplicating data so that it resides close to the points where it is needed. The use of cloud computing itself can create tremendous amounts of log data, and products such as Storm from the machine data firm Splunk seek to address this intersection of cloud and big data. An April 2012 Forbes article described Splunk as “Google for machine data.”

Companies on the leading edge of the big data movement, then, are finding that there is no one-size-fits-all solution for storage and analytics. Standard SQL remains sufficient for certain operations, and both NewSQL and NoSQL solutions have specialized benefits that make them worthwhile. As products continue to evolve (such as NewSQL systems handling unstructured data or NoSQL systems supporting ACID), the classifications may fade in importance as specific solutions are matched to specific problems. With data streams constantly flowing from various sources, there are opportunities to improve services or trim costs by rapidly digesting and analyzing the incoming data. As new products are introduced to meet these needs, there will also be a growing need for education, product selection, and services surrounding big data initiatives.

While most businesses embrace a data-driven decision-making mindset, few are actually well positioned to do so. Even fewer can claim to be anywhere near the point of engaging in a big data initiative.

According to CompTIA research, slightly less than 1 in 5 companies report being exactly where they want to be in managing and using data. Granted, this represents a high bar, but even when including those ‘very close’ to their target, it still leaves a majority of businesses with significant work to do on the data front.

Wasted time that could be spent in other areas of the business

Inefficient or slow decision-making / Lack of agility

Internal confusion over priorities

Reduced margins due to operational inefficiencies

Inability to effectively assess staff performance

Moreover, the research suggests many business and IT executives haven’t fully ‘connected the dots’ between developing and implementing a data strategy and its effect on other business objectives, such as improving staff productivity or developing more effective ways to engage with customers.

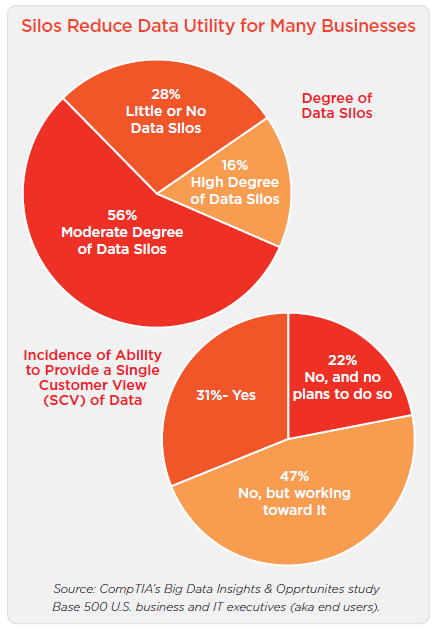

With inexpensive storage and the proliferation of applications to capture and manage data, businesses have freely built out their data repositories and related applications. Over time, many organizations found themselves with dozens or even hundreds of different databases or data repositories. Few of these applications or systems were designed with interconnectivity in mind, resulting in data silos.

CompTIA research found nearly 3 in 4 companies reporting a high or moderate degree of data silos within their organization. At a basic level, silos may result in a loss of productivity, such as a situation where a salesperson needs to navigate a range of applications (CRM, finance, email marketing campaigns, web analytics, social media monitoring and project management) to get an accurate picture of a customer’s likelihood to renew a contract or increase their order. At a more serious level, silos may contribute to catastrophic loss of money or life, such as a situation where a hospital does not have a complete medical history of a patient because critical data is spread over multiple, unconnected databases.
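Breaking down silos usually starts with joining records from separate systems on a shared key. The following Python sketch assembles a single customer view from three hypothetical sources (CRM, finance, web analytics) keyed by an assumed `customer_id`; in practice, the hardest part is often that silos lack a clean common identifier.

```python
# Sketch: a single customer view assembled from siloed systems by a shared key.
# System contents, field names, and the customer_id key are hypothetical.
crm = {"C001": {"name": "Acme Corp", "renewal_date": "2013-09-30"}}
finance = {"C001": {"open_invoices": 2, "annual_spend": 48000}}
web_analytics = {"C001": {"visits_last_30d": 17}}


def customer_view(customer_id: str, *sources: dict) -> dict:
    """Merge each source's record for customer_id; later sources win on field-name clashes."""
    view = {"customer_id": customer_id}
    for source in sources:
        view.update(source.get(customer_id, {}))  # missing records contribute nothing
    return view


view = customer_view("C001", crm, finance, web_analytics)
print(view)
# {'customer_id': 'C001', 'name': 'Acme Corp', 'renewal_date': '2013-09-30',
#  'open_invoices': 2, 'annual_spend': 48000, 'visits_last_30d': 17}
```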


Based on a self-assessment, only 16% of companies consider their use of data analysis, mining and BI to be at the advanced level. In contrast, 30% put their firm at the basic level.

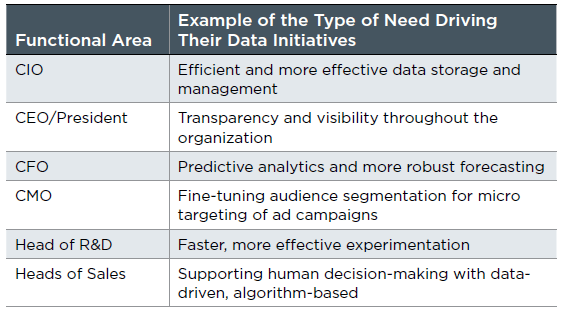

Because data now permeates every functional area of an organization, data-related initiatives may originate from any number of executives or line-of-business (LOB) departments. Past CompTIA research has consistently shown an increase in technology purchases, both authorized and unauthorized, taking place outside the IT department. BYOD, cloud computing (especially software-as-a-service) and the general democratization of technology have been contributing factors in this trend.

In the case of data initiatives, the CIO or IT department remains a primary catalyst, accounting for about half of project starts. The remainder originate with the president, CEO, CFO or other line-of-business executives. For vendors and IT solution providers working in the data space, the takeaway is clear: be prepared for multifaceted customer engagements that combine technical and business problem-solving with staff across many functional areas.

Real-time analysis of incoming data

Customer profiling and segmentation analysis

Email marketing campaign effectiveness

Predictive analytics to forecast sales and other trends

Metrics and Key Performance Indicators (KPIs)
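Of the uses listed above, predictive analytics is the easiest to illustrate briefly. The sketch below fits a least-squares linear trend to hypothetical monthly sales and extends it one month ahead. It is the simplest possible forecasting model, chosen for clarity; real forecasts would need to account for seasonality, promotions, and uncertainty.

```python
# Sketch: least-squares linear trend as a minimal predictive model (hypothetical data).


def fit_linear_trend(values: list[float]) -> tuple[float, float]:
    """Return (intercept, slope) of y = a + b*t fitted to t = 0, 1, ..., n-1."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    return y_mean - slope * t_mean, slope


def forecast(values: list[float], steps_ahead: int = 1) -> float:
    """Extend the fitted trend `steps_ahead` periods past the last observation."""
    a, b = fit_linear_trend(values)
    return a + b * (len(values) - 1 + steps_ahead)


monthly_sales = [100.0, 112.0, 118.0, 130.0]
print(round(forecast(monthly_sales), 1))  # 139.0
```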

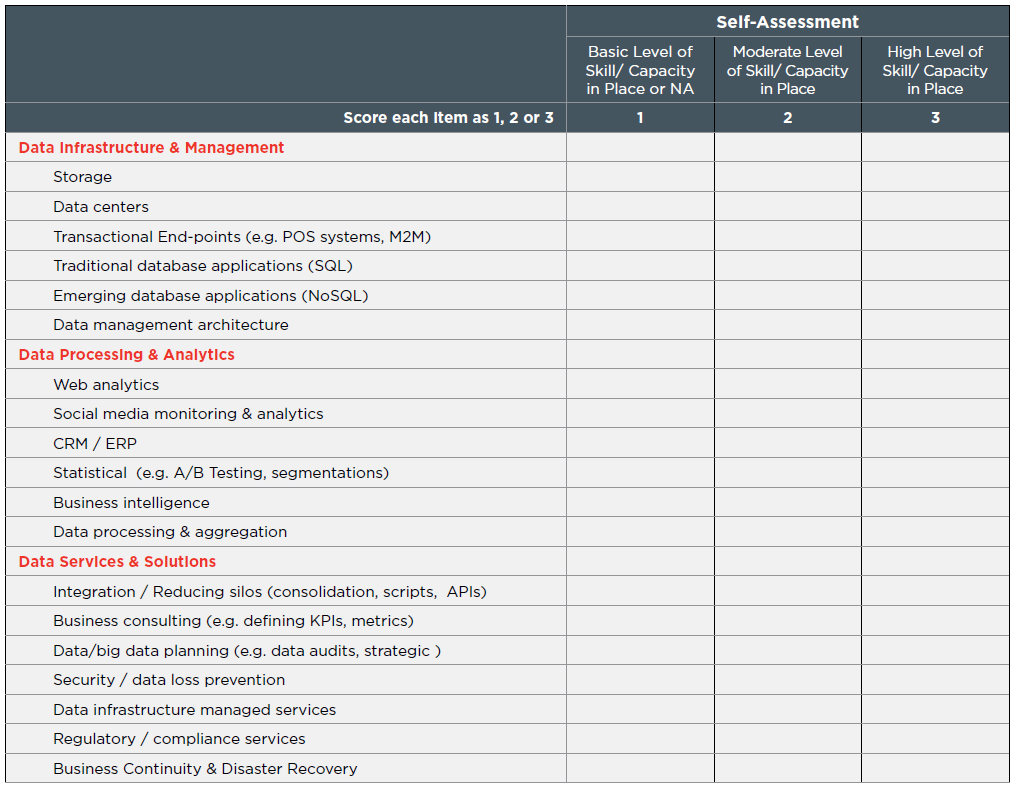

The Self Assessment: What are your firm’s current capabilities related to big data?

The aforementioned self-assessment will help determine possible entry points into the big data space. Note: the self-assessment is not designed to provide a specific recommendation based on scoring; rather, the intent is to provide a framework for evaluating possible opportunities. Two types of roadmaps exist for most firms: (1) expansion within a segment of big data, or (2) expansion into an adjacent segment of big data. Consider the following examples:

Example 1: an IT solution provider rates highly on skill/capacity in the areas of storage and datacenter management, which fall into the data infrastructure and management component of big data. Expansion within this segment may entail developing expertise with data management architecture and database applications. This may include facilitating a data audit for a customer to establish a data map, followed by strategies to address database silos. CompTIA research found that 67% of customers reported some degree of rogue data repositories within their organization, so a data audit is always a good first step. Additionally, the solution provider may consider adding on business continuity/disaster recovery services, which may be thought of as an indirect big data opportunity. See the Additional Resources section of this document for examples of CompTIA Channel Training that support indirect big data offerings.
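Example 1 mentions a data audit to establish a data map. As a minimal sketch of the inventory step, assuming the repository is a SQLite database, the Python below lists each table with its columns and row count. The example tables are hypothetical, and a real audit would cover every repository in the organization, including rogue ones.

```python
# Sketch: inventory step of a data audit for one SQLite database (hypothetical tables).
import sqlite3


def data_map(conn: sqlite3.Connection) -> dict[str, dict]:
    """Return {table: {"columns": [...], "rows": n}} for every table in the database."""
    tables = [r[0] for r in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    result = {}
    for table in tables:
        # PRAGMA table_info returns (cid, name, type, notnull, default, pk) per column.
        columns = [row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')]
        rows = conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]
        result[table] = {"columns": columns, "rows": rows}
    return result


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
conn.execute("CREATE TABLE customers_copy_2011 (id INTEGER, name TEXT)")  # a likely stray copy
conn.executemany("INSERT INTO customers (name, email) VALUES (?, ?)",
                 [("Acme", "ops@acme.example"), ("Globex", "it@globex.example")])
inventory = data_map(conn)
print(inventory)
```

Table names come from `sqlite_master` rather than user input, which is why they are interpolated directly here.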

Example 2: an IT solution provider rates highly on skill/capacity in the areas of storage and datacenter management. Expansion into an adjacent segment of big data may entail developing capabilities around data processing and aggregation, web analytics, or social media analytics. In many situations, customers will attempt the DIY approach when it comes to web and social media analytics. Some will succeed, while others will struggle with integration: the need to aggregate different data sources and analyze them holistically, all in real time. IT solution providers able to develop data integration expertise will find themselves well positioned to win business.

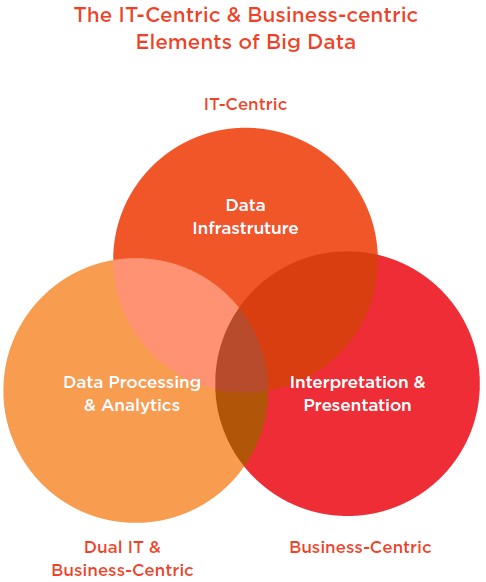

Perhaps more so than other segments of the IT industry, entering the big data space requires more than just IT know-how. “In today’s world, knowing the customer’s line of business and how to work with the data [and] systems associated with that line of business is everything. Just knowing ‘computer stuff’ may get you in the door, but to be really valuable you have to hold their lives in your hands,” says Brian Kerhin, president and founder of Byte Harmony, Inc., an IT solution provider with offices in Milwaukee and Pittsburgh. While there are opportunities for firms that want to focus on the IT-centric components of the big data market, providing customers a total solution requires an equal level of expertise on the business-centric side.

With that said, it’s unrealistic to expect to master every aspect of big data.

Similar to varying entry points, there are also varying paths for moving up the big data ladder. Firms that have a solid foundation of skill/capacity, typically including elements in each of the three main segments of big data, can begin to explore a range of expansion options. Examples may include:

Industry Vertical Specialization: beyond the basics, most industry verticals require a degree of specialization for effective data analysis. Unique data streams, KPIs, metrics and reporting needs can make one industry vertical significantly different from another. Consider the retail vertical. Most retailers currently rely heavily on transactional data: customer purchases, including the timing of those purchases, the impact of sales or promotions, inventory levels and so forth. Increasingly, retailers seek to factor in social streams of data, customer evaluations and omni-channel behavioral data, for example. Metrics may cover comp sales, inventory turnover, action aisle sales, shrinkage levels and a host of other factors unique to the retail space. Obviously, IT firms that can “talk the language” of retail will be better positioned to understand customer needs and develop a trusted advisor relationship.

Emerging Big Data Technologies Expertise: many industry articles and pundits tend to position Hadoop as the poster child for emerging big data technologies. Indeed, there is a lot of innovation surrounding Hadoop, from new tools and applications that make Hadoop more accessible to more vendors offering integrated solutions that include support across the Hadoop ecosystem. For IT solution providers ready to advance to the next level, some degree of Hadoop expertise may make sense. Keep in mind, Hadoop represents only one facet of big data.

Planning & Consulting: according to CompTIA research, one of the most significant skills gaps associated with data is in the area of strategic planning: the ability to develop mid- and longer-term roadmaps for data collection, storage, management and analysis. While a CIO may be equipped to foresee data infrastructure needs, they may struggle to anticipate business intelligence needs across the organization. Data-savvy IT solution providers can bring big-picture perspective and help facilitate planning discussions across functional areas, offering insights and guidance along the way. As noted previously, a data audit can be positioned as one of many steps in developing mid- and longer-term data strategies.

Developing Differentiators: while the set of pure-play big data firms is relatively small, the broader ecosystem of firms in the data space is quite large. When thinking about potential competitors, it’s a good idea to think broadly. PR firms, survey research firms, brand management firms, marketing firms and business consultants may all be pitching some type of data-related services. Consequently, it’s especially important to establish your differentiating characteristics: how do your capabilities differ from a marketing/PR firm, for example, that may be well versed in web analytics, social media analytics and email campaign analytics? Hint: it typically revolves around integration and end-to-end solutions. Moreover, don’t overlook these types of firms as possible partners. A marketing/PR firm may bring a number of useful skillsets to a partner relationship.

ACID: a set of four properties (Atomicity, Consistency, Isolation, and Durability) that, when present, ensure that transactions in a database will be processed reliably. It is a challenge to maintain ACID compliance when addressing availability and performance in web-scale systems.

Big data: the discipline of managing and analyzing data in extremely large volumes, very high velocity, or wide variety.

Business intelligence: a broad term that encompasses various activities designed to provide a business with insight into operations. Data mining, event processing, and benchmarking are activities that fall under the umbrella of business intelligence.

Data audit: the process of reviewing the datasets in use at a business.

Data science: a discipline that incorporates statistics, data visualization, computer programming, data mining, machine learning, database engineering, and business knowledge to solve complex problems.

Hadoop: one of the most common platforms for storing, manipulating, and analyzing Big Data. The two main components are the Hadoop Distributed File System (HDFS) and the MapReduce engine for sorting and analyzing data.

Machine-to-Machine (M2M): communications that take place between devices to collect data or perform operations. If the devices contain algorithms to change behavior based on incoming data, this is known as machine learning.

Metadata: data used to describe data, such as file size or date of creation.

NewSQL: a class of database systems that provides greater scalability while maintaining ACID compliance.

NoSQL: a class of database systems that provides very high scalability and performance but typically does not guarantee ACID compliance. Primary examples of NoSQL databases include key-value stores (such as Dynamo), tabular or wide-column stores (such as HBase and Cassandra), and document stores (such as CouchDB or MongoDB).

Predictive analytics: a branch of business intelligence that uses data for future forecasting and modeling.

Relational database management system (RDBMS): the predominant method for storing and analyzing data, often using SQL as the base language. These systems have limitations in scalability and performance when dealing with very large data sets.

Structured data: data that fits a defined format so that it can be placed in an RDBMS for analysis.

Unstructured data: data such as documents, images, or social streams that does not fit into a defined format and cannot easily be placed into an RDBMS.

For more Big Data terms and definitions, see http://data-informed.com/glossary-of-big-data-terms/
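The ACID entry in the glossary above is easiest to grasp through atomicity. This Python sketch uses the built-in `sqlite3` module with a hypothetical accounts table: a transfer either commits both of its updates or, if a constraint fails partway through, rolls both back.

```python
# Sketch: atomicity (the "A" in ACID) with Python's built-in sqlite3.
# Account names and balances are hypothetical.
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    " name TEXT PRIMARY KEY,"
    " balance REAL NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100.0), ("bob", 50.0)])
conn.commit()


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: float) -> bool:
    """Move funds in one transaction; the connection context manager commits or rolls back."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes the block
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False  # CHECK constraint failed; the earlier credit was rolled back as well


print(transfer(conn, "alice", "bob", 30.0))   # True
print(transfer(conn, "alice", "bob", 500.0))  # False: would overdraw alice
final = dict(conn.execute("SELECT name, balance FROM accounts"))
print(final)  # {'alice': 70.0, 'bob': 80.0}
```

Without the transaction, the failed transfer would have left bob credited with money that never left alice’s account; keeping guarantees like this across many machines is what makes ACID hard at web scale.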

1. Leverage the Hype…But Don’t Get Carried Away

Big data is the topic du jour at companies large and small. Use this opportunity to begin a dialogue on all things data-related, from the hardware and software responsible for storing and managing data, to the services and security responsible for using and protecting data. Customers are hungry for information and insights, but keep in mind, most are still at the basic and intermediate levels.

2. Augment Existing Expertise

Big data is an expansive category, so don’t expect to master everything. Begin with your core areas of expertise and look for natural extensions. For example, an MSP providing hosted email may consider adding on an email campaign management offering, along with the accompanying integration and analytics services.

3. Enhance Your Business Consulting Skills

The days of selling solely to the IT department are fading. The big data trend will accelerate this decline. Line-of-business (LOB) executives, the primary users of data, will increasingly drive decisions regarding data initiatives.

4. Leverage Existing Vendor Partners; Add New Complementary Ones

Just about every major vendor has rolled out some type of big data offering. Some will look to entice quality channel partners through additional incentives. Additionally, there has been an incredible amount of data-related innovation in recent years, boosting the number of start-up vendors in the space. Many are making their first foray into building out a channel program, so they may be eager to please partners willing to accept a few bumps along the way.

5. Bigger is Not Always Better: Think in Terms of Basic Customer Problems

Only a sliver of the market has true big data problems. Rather, most businesses tend to have lots of small and midsize problems, some basic and some more complex: lack of real-time reporting, lack of customer insights due to data silos, lack of mobile access to data, and insufficient data loss prevention safeguards, to name a few. Be sure to consider problems throughout the entire organization, especially among the power users of data, such as sales teams and customer service staff.

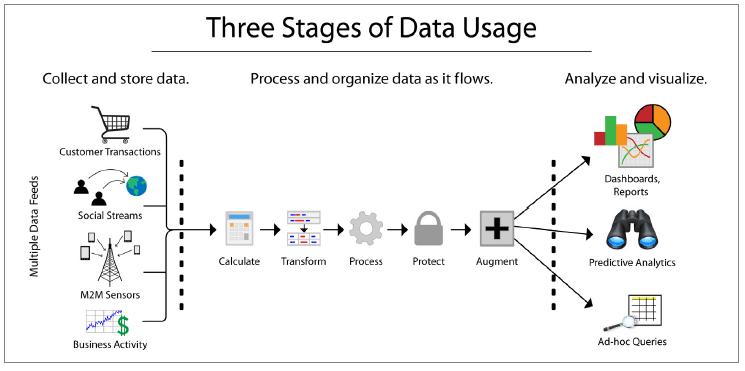

6. Connect the Dots

Many newspaper articles covering big data like to pique the reader’s interest with incredible examples of how big data is changing retail, healthcare, space exploration, or even government. Missing from this approach is an explanation of the many behind-the-scenes components of big data and how much needs to happen to generate value from it. Connect the dots for customers using graphics such as the one found in this Guide and you will be much better positioned to identify opportunities along the way.

7. Deliver Wins…and Fast

The downside of hype: when high expectations are not met, frustration may ensue, which may then negatively impact future investments. In the case of big data, be cautious when starting with a lengthy, complex initiative yielding an uncertain payback period. Ideally, a modular approach that allows for a series of deliverables (aka “wins”) over time helps customers recognize value sooner rather than later. As a reminder, a customer’s perception of big data ROI will ultimately be determined by quantifiable cost savings or revenue generation.
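Because ROI ultimately comes down to quantifiable gains and costs, the arithmetic is worth making explicit. This Python sketch uses hypothetical figures to compute simple ROI and the payback period of a phased rollout; it is an illustration, not a financial model.

```python
# Sketch: simple ROI and payback-period arithmetic; all figures are hypothetical.
from typing import Optional


def roi(gain: float, cost: float) -> float:
    """ROI = (gain - cost) / cost, where gain is cost savings plus new revenue."""
    return (gain - cost) / cost


def payback_period(upfront_cost: float, benefits: list[float]) -> Optional[int]:
    """First period (1-based) in which cumulative benefits cover the upfront cost, else None."""
    cumulative = 0.0
    for period, benefit in enumerate(benefits, start=1):
        cumulative += benefit
        if cumulative >= upfront_cost:
            return period
    return None


print(f"{roi(150_000, 100_000):.0%}")                             # 50%
print(payback_period(100_000, [20_000, 30_000, 50_000, 50_000]))  # 3
print(payback_period(100_000, [10_000, 10_000]))                  # None
```

Delivering benefits in early periods, as a modular approach aims to, pulls the payback period forward.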

Big Data Vendors

http://wikibon.org/wiki/v/Big_Data_Vendor_Revenue_and_Market_Forecast_2012-2017

Open Source Big Data Tools

http://www.bigdata-startups.com/open-source-tools/

CRN Big Data 100 – List of 100 vendors in the categories of Data Management, Data Infrastructure & Services and Data Analytics

http://www.crn.com/news/applications-os/240152891/the-2013-big-data-100.htm

Big Data Companies

http://www.bigdatacompanies.com/directory/

CompTIA’s Big Data Insights and Opportunities research study contains the dual perspective of end users and channel partners. Additionally, CompTIA studies covering cloud, mobility and security provide a range of data-related insights.

To learn more, visit http://www.comptia.org/insight-tools/technology?tags=security for access to CompTIA’s channel training resources on DRBC.

Quick Start Session for Data Recovery and Business Continuity

10-Week Guide to Business Continuity and Data Recovery

Executive Certificate in Data Recovery and Business Continuity (Foundations)
