Ask 10 IT professionals “What is cloud computing?” and you may get 10 different answers. Some may cite technical specifications, some may name a well-known cloud service provider, and some may claim the cloud is really just another term for the internet.

The debate over how to define cloud computing reflects its many elements and nuances. The cloud is not one thing but rather a term for a computing model with many moving parts. This model now touches more areas of the technology landscape, at more levels, than ever before. Cloud computing has expanded, and will continue to expand, the options organizations of all sizes have for operating and using IT.

Cloud computing definition

Since the beginning of enterprise IT, computing models have constantly evolved. From mainframes to virtualized servers to hosted systems, companies have looked for ways to make their IT architecture more efficient and cost-effective. Cloud computing is the next step in this evolution, and while it bears several similarities to previous models, it also has some unique qualities that open up new capabilities.

In thinking about a cloud computing definition, we have to consider the five characteristics that the National Institute of Standards and Technology (NIST) outlines as essential to any cloud system:

- Broad network access: This characteristic is shared with other models and simply means that cloud services rely on network connections between backend infrastructure (such as servers and storage) and frontend clients (such as laptops or smartphones).

- Resource pooling: Computing resources are pooled to serve multiple users. In many cases, these are virtualized resources, but in some cases, physical resources themselves are pooled together with a layer of software.

- Rapid elasticity: Here cloud computing services begin to diverge sharply from other models. Rather than simply grouping resources into static pools, cloud resources can grow and shrink dynamically as workload demands change.

- On-demand self-service: In contrast to other models that require significant technical expertise to spin up, cloud services offer simple methods that allow users with relatively limited technical skills to create or access resources.

- Measured service: Given the dynamic nature of cloud computing, the final characteristic is the ability to measure exactly how many resources are being used, which makes it possible to charge for actual usage rather than purchasing or renting capacity for a fixed period of time.
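To make rapid elasticity and measured service concrete, here is a minimal Python sketch comparing a pool sized statically for peak demand with one that scales every hour and is billed only for what it uses. The hourly demand figures, per-server capacity, and price are hypothetical numbers chosen for illustration, not real provider data, and actual cloud billing models are more involved.

```python
import math

# Hypothetical figures for illustration only (not real provider data).
HOURLY_DEMAND = [100, 400, 1200, 1800, 900, 300]  # requests per hour
CAPACITY_PER_SERVER = 500                          # requests one server handles per hour
PRICE_CENTS_PER_SERVER_HOUR = 10                   # assumed metered rate


def servers_needed(demand: int, capacity: int) -> int:
    """Smallest number of servers that can absorb the demand (always at least one)."""
    return max(1, math.ceil(demand / capacity))


def elastic_server_hours(demand_by_hour: list[int], capacity: int) -> int:
    """Rapid elasticity: the pool grows and shrinks to match each hour's demand."""
    return sum(servers_needed(d, capacity) for d in demand_by_hour)


def static_server_hours(demand_by_hour: list[int], capacity: int) -> int:
    """Static pool: sized for the peak hour and kept running the whole time."""
    peak = servers_needed(max(demand_by_hour), capacity)
    return peak * len(demand_by_hour)


def metered_bill_cents(server_hours: int, rate_cents: int) -> int:
    """Measured service: charge only for the server-hours actually consumed."""
    return server_hours * rate_cents


if __name__ == "__main__":
    elastic = elastic_server_hours(HOURLY_DEMAND, CAPACITY_PER_SERVER)
    static = static_server_hours(HOURLY_DEMAND, CAPACITY_PER_SERVER)
    print(f"elastic: {elastic} server-hours, "
          f"{metered_bill_cents(elastic, PRICE_CENTS_PER_SERVER_HOUR)} cents")
    print(f"static:  {static} server-hours, "
          f"{metered_bill_cents(static, PRICE_CENTS_PER_SERVER_HOUR)} cents")
```

With these assumed numbers, the elastic pool consumes 12 server-hours against 24 for the static pool. A flatter workload would narrow or erase that gap, which is one reason the cloud is not automatically cheaper for every application.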

Initially, companies leveraged only some of these unique characteristics. For instance, a company might have migrated some on-premises applications to a cloud provider, offloading the maintenance work needed for local servers. Over time, companies have begun exploring the more advanced aspects of cloud computing, using flexible development environments to build new applications or to implement robust storage solutions.

Benefits of cloud computing

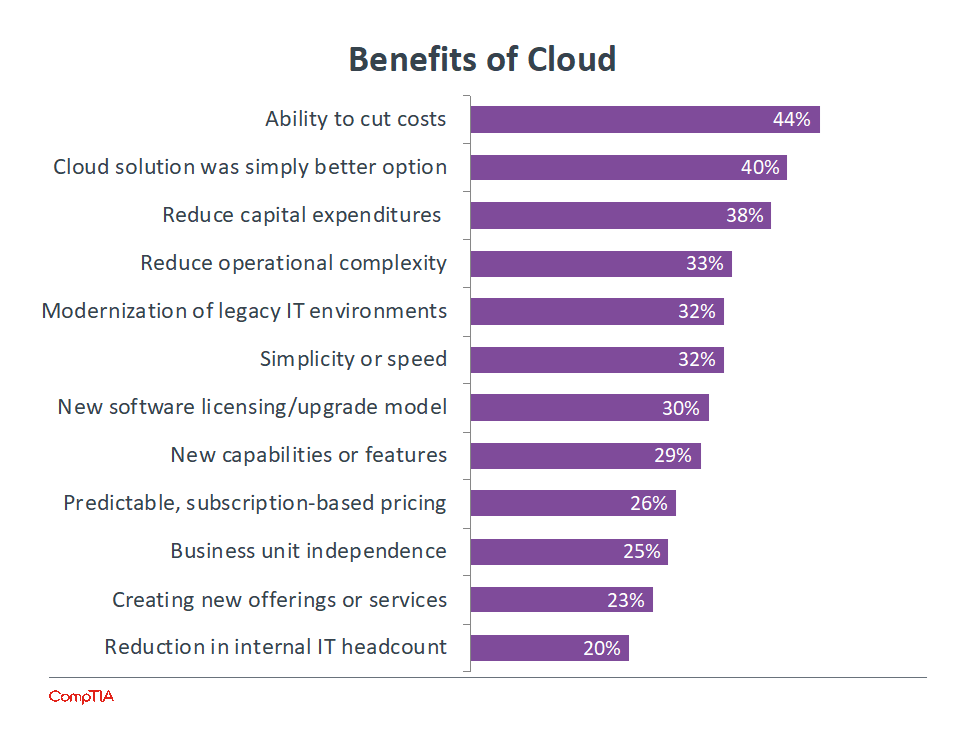

As with most new technology models, the initial benefit that companies look for with cloud computing is the ability to cut costs. For many years, the corporate approach to IT has been to treat it as a cost center, so CIOs and IT pros are always looking for ways to provide the same level of service at lower costs.

While cloud computing can provide direct cost savings in some cases, certain applications can actually be more expensive to run in the cloud because of their performance and security requirements. However, cloud computing offers many other benefits that appeal to companies as they become more strategic with technology.

As technology becomes more integrated into the business, new requirements emerge for how it is managed. A company may want to shift spending from capital expenditure to operating expenditure, or it may want to reduce overhead and operational complexity. Cloud computing helps with these goals, and for many companies, the cloud simply becomes the best infrastructure option.

Types of cloud computing services

IT systems are really a stack of different components:

- At the bottom is the infrastructure layer, the actual hardware that runs everything.

- Next comes an operating system, allowing software applications to easily access the hardware components.

- Finally, there is the application itself, providing a user interface and performing a specific purpose.

There are cloud offerings for each part of this stack.

What is IaaS?

Infrastructure as a Service (IaaS) offers basic components, giving access to virtualized servers or storage so that end users can build systems from the ground up. Put simply, IaaS provides a virtual server that the customer rents from another company that operates a data center. IaaS favors access over ownership and gives the end user flexibility in hosting custom-built apps, while also providing general-purpose storage.

What is PaaS?

Platform as a Service (PaaS) goes a step further and provides the operating system layer, allowing end users to skip some of the steps in organizing infrastructure and move straight into software development. A PaaS provider supplies the physical IT infrastructure, such as data centers, servers, storage, and network equipment, plus an intermediate layer of software that includes tools for building apps. A user interface for working with the platform is usually part of the package as well.

What is SaaS?

Software as a Service (SaaS) is the top layer of the stack, providing end users with complete software that typically runs in a browser rather than being installed locally. This means the software can be accessed from any device with an internet connection and a web browser. SaaS offerings are delivered through one of the cloud deployment models described below.

These three basic offerings have spawned countless other “as a service” solutions. Part of the challenge of cloud computing is sorting through these many offerings and figuring out which ones best match the company’s needs. In most cases, the end result will include pieces from multiple parts of the stack.
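The division of labor between IaaS, PaaS, and SaaS can be summarized using the three-layer stack described above. The Python sketch below is a deliberate simplification that uses only those three layers (real offerings split responsibilities more finely, for example around data and security); the `split_responsibility` function and the `on-premises` entry are illustrative assumptions, not part of any standard.

```python
# The three layers of the stack, from bottom to top.
STACK = ("infrastructure", "operating system", "application")

# Simplified assumption: each model hands the provider the bottom N layers.
PROVIDER_LAYERS = {
    "on-premises": 0,  # the company runs every layer itself
    "IaaS": 1,         # provider supplies the (virtualized) infrastructure
    "PaaS": 2,         # ...plus the operating system / platform
    "SaaS": 3,         # ...plus the finished application
}


def split_responsibility(model: str) -> tuple[tuple[str, ...], tuple[str, ...]]:
    """Return (layers the provider manages, layers the customer manages)."""
    n = PROVIDER_LAYERS[model]
    return STACK[:n], STACK[n:]


if __name__ == "__main__":
    for model in PROVIDER_LAYERS:
        provider, customer = split_responsibility(model)
        print(f"{model:12} provider: {list(provider)}  customer: {list(customer)}")
```

Moving up the stack from IaaS to SaaS shifts more layers to the provider, which is the trade-off companies weigh when picking offerings from different parts of the stack.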

Alongside these service types, there are three main cloud deployment models.

Public cloud

In a public cloud, a third-party provider owns and operates the resources and shares them among many customers. Although many people apply the label “cloud computing” to any third-party resource, a true public cloud uses a software layer to deliver elasticity, self-service, and measured usage rather than simply taking over the manual work involved in standing up IT systems.

Private cloud

Companies can build private clouds using their own IT infrastructure. Once again, what separates a standard server room from a true private cloud is the set of unique cloud computing characteristics, which can be added with a layer of software that a company builds itself or purchases from a vendor.

Hybrid cloud

A hybrid cloud typically refers to a single application configured across both public cloud and private cloud resources, drawing on external resources when the workload becomes too great to handle internally. Multi-cloud is a related term that typically refers to an overall architectural approach in which different applications reside on a public or private cloud, depending on their requirements, and the entire architecture must be optimized and managed as a whole.
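The hybrid pattern of sending overflow work to the public cloud (often called cloud bursting) comes down to a simple placement rule. The sketch below assumes workload and capacity can be measured in the same abstract units; the `place_workload` function and the demand figures are hypothetical, and a real implementation would also weigh latency, data location, and cost.

```python
def place_workload(demand: int, private_capacity: int) -> tuple[int, int]:
    """Fill private capacity first, then burst the remainder to the public cloud.

    Returns (units on private cloud, units on public cloud).
    """
    if demand < 0 or private_capacity < 0:
        raise ValueError("demand and capacity must be non-negative")
    private = min(demand, private_capacity)  # keep as much as possible in-house
    public = demand - private                # overflow goes to the public cloud
    return private, public


if __name__ == "__main__":
    PRIVATE_CAPACITY = 100  # hypothetical units of in-house capacity
    for hour, demand in enumerate([60, 95, 140, 210, 80]):
        private, public = place_workload(demand, PRIVATE_CAPACITY)
        print(f"hour {hour}: private={private} public={public}")
```

Because public resources are only used during the peaks, the company pays for that extra capacity only when it actually needs it.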

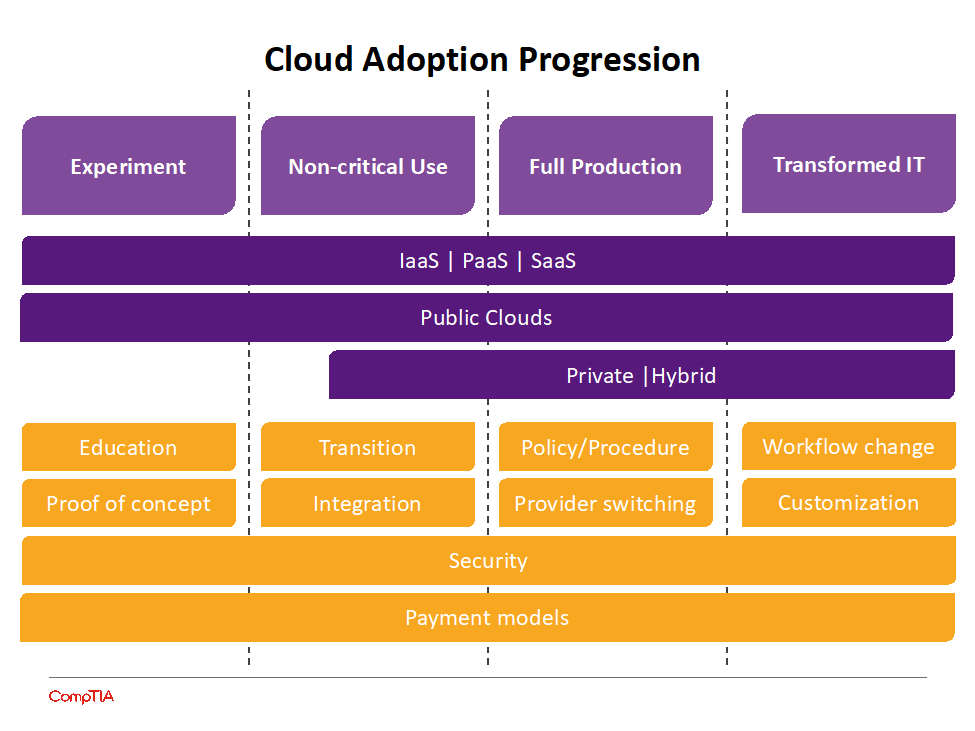

For a technology as transformative as cloud computing, companies typically go through several stages of adoption:

- The experiment phase is primarily about exploration and education.

- In the non-critical use stage, an organization that is ready to take the first step migrates one of its peripheral systems to the cloud to learn about cloud operations and integration.

- Once it is comfortable with the pros and cons, the organization moves to the full production stage, evaluating each of its systems to determine where it should be placed in a multi-cloud architecture.

- Finally, it reaches the transformed IT stage, where it has not simply migrated legacy applications but has rebuilt pieces as needed to take full advantage of cloud computing capabilities.

This process does not happen overnight. Cloud computing has the potential to transform a business, but it does so by changing workflows and requiring new skills. Based on past computing models, it is reasonable to expect the cloud computing era to last 20 to 30 years, with businesses evolving over that period and discovering new ways to use technology.

Cloud computing jobs and careers

With technology as far-reaching as cloud computing comes a wide range of jobs that require up-to-date cloud skills. While some of these roles are new positions focused primarily on cloud usage, most are existing roles that need to add cloud expertise to their ongoing responsibilities.

These roles include:

- Server administrator: One of the most common IT roles, the server administrator is responsible for the overall management of physical servers, virtual servers, and business systems. Some companies label this role systems administrator, and while the exact scope may differ between the two, the general concept is similar. In today’s environment, individuals in this role need a solid working knowledge of cloud computing systems as they determine which platform is best for each application and manage the entire architecture.

- Cloud architect: Mostly seen in large companies with extensive needs, cloud architect is a newer role that focuses specifically on cloud resources and less on a traditional server room. Typical tasks might include cloud vendor analysis, private cloud construction, and cloud orchestration. As more IT architectures become combinations of cloud resources and on-premises resources, this role may fade in favor of the more general systems administrator or systems architect.

- Software developer: Some of the greatest disruption caused by cloud computing has been in software development, where barriers to entry have fallen and new capabilities have drastically changed workflows. The increased demand for software is also driving more specialized software positions. Some of the more popular job titles are front-end developer, full stack developer, and DevOps engineer.

- Data scientist: Along with an understanding of new data tools and corporate data structures, data scientists need expertise with cloud systems, since cloud resources are practically a requirement for modern data processing and analysis. The first step is often using various storage options to build a comprehensive data repository. From there, data scientists typically employ cloud software, especially tools from the catalogs of major public cloud providers.

Cloud computing certifications

As with most IT careers, certifications are a valuable tool in demonstrating knowledge of cloud computing. The cloud market is mature enough to have a solid hierarchy of options, from foundational vendor-neutral credentials to advanced vendor-specific certifications. These certifications can benefit both IT professionals seeking career advancement and new job candidates trying to get into IT.

The following list provides some of the most sought-after cloud computing certifications among employers:

- CompTIA Cloud Essentials

- CompTIA Cloud+

- Microsoft Certified: Microsoft Azure Fundamentals

- AWS Certified SysOps Administrator

- Certified Cloud Security Professional (CCSP)

- Google Cloud Certified Professional Cloud Architect

- Cisco CCNA Cloud

Whether you’re looking to work in cloud computing or simply want to increase your knowledge of the subject, be sure to check out our cloud certifications.